Moderating a WordPress site can be rewarding yet challenging, especially when you face a surge of malicious or abusive behavior from anonymous accounts. These users often rely on privacy-oriented tools such as VPNs, proxy servers, or email masking services to conceal their identities, which makes it difficult to detect harmful intent without crossing into ethical or legal grey areas.

TL;DR

To manage anonymous abuse on a WordPress site without violating privacy laws, site moderators must strike a delicate balance between vigilance and user rights. Use behavioral patterns, moderation plugins, and server-side tools to analyze activity without collecting intrusive personal data. Blocking should target behavior, not identity, and remain GDPR and CCPA compliant. Transparency and user appeals processes are critical for ethical enforcement.

Understanding the Nature of Anonymous Abuse

Anonymity on the web serves a valuable purpose—protecting users’ identities in sensitive communities or allowing freedom of expression. However, bad actors often exploit this to spam, harass, or spread misinformation undetected. WordPress site owners and moderators have a responsibility to maintain a safe and constructive environment, but must do so legally and respectfully.

Step-by-Step Guide for Moderators

1. Identify Common Indicators of Abusive Activity

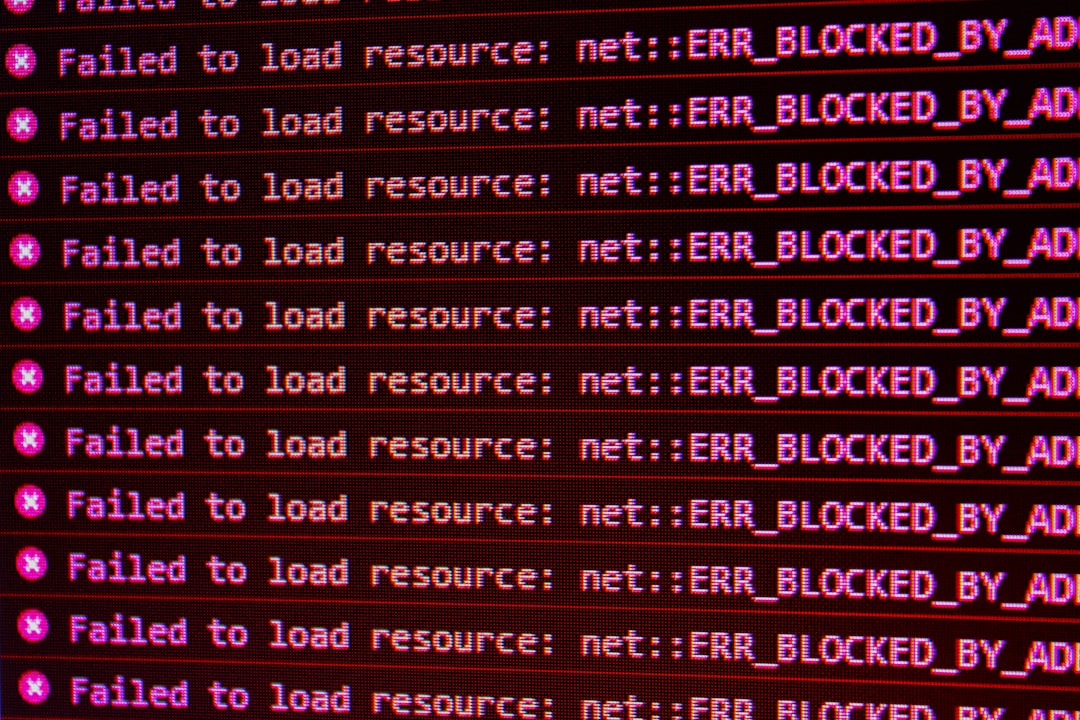

Since traditional user-identification strategies like IP logging or device fingerprinting may conflict with privacy regulations like the GDPR or CCPA, moderators need to focus on behavior instead:

- High volume of posts/comments within a short period

- Repetitive content across multiple pages or threads

- Unusual language patterns or obscene language flagged by filters

- Unusual location patterns (rapid IP changes may indicate VPN use)
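The first two indicators above can be checked from nothing more than timestamps and comment text. The following is a minimal sketch in Python, not a WordPress API: the `Comment` record, the function names, and the thresholds (more than 5 posts in 60 seconds, 3 or more identical texts) are all illustrative assumptions to tune for your own site.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Comment:
    # Hypothetical record: author_id is an opaque account or session ID,
    # deliberately not an IP address or email.
    author_id: str
    posted_at: float  # Unix timestamp in seconds
    text: str


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivially varied copies match."""
    return " ".join(text.lower().split())


def is_burst(comments: list[Comment], window_s: float = 60.0, max_posts: int = 5) -> bool:
    """Return True if more than max_posts comments fall inside any window of window_s seconds."""
    times = sorted(c.posted_at for c in comments)
    start = 0
    for end, t in enumerate(times):
        # Slide the left edge forward until the window spans at most window_s.
        while t - times[start] > window_s:
            start += 1
        if end - start + 1 > max_posts:
            return True
    return False


def repeated_texts(comments: list[Comment], min_repeats: int = 3) -> list[str]:
    """Return normalized texts that were posted at least min_repeats times."""
    counts = Counter(normalize(c.text) for c in comments)
    return [text for text, n in counts.items() if n >= min_repeats]
```

Both functions would be run per author over that author's recent comments. A match is a reason to queue content for review, not proof of abuse.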

2. Use WordPress Moderation Plugins

Some plugins are specifically built to detect spammy or abusive behavior without breaching privacy rules:

- Akismet Anti-Spam: Excellent at identifying spammy content submitted via forms or comments

- WPBruiser: Works without CAPTCHA and offers security without user tracking

- Human Presence: Uses behavioral analysis to detect bots and fake interactions

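These tools rely mainly on analytics and pattern recognition, but they are not all data-free. Akismet, for example, sends comment data such as the commenter’s IP address and user agent to its service for analysis. Review each plugin’s privacy documentation and disclose its use in your privacy policy.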

3. Implement Comment and Account Validation Controls

While allowing total anonymity increases openness, you can reduce the chance of abuse without collecting private data by taking the following steps:

- Require users to confirm their email (via a link or code)

- Use CAPTCHA or time-based validation on comment forms

- Throttle user comments from the same browser session/IP range
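Throttling, the last item above, is usually implemented as a sliding-window rate limit. Here is a minimal sketch, assuming an in-memory store and a hypothetical `CommentThrottle` class. The key is whatever opaque token you already have (a session ID or a truncated IP range), and the limits are placeholders.

```python
import time
from collections import defaultdict, deque


class CommentThrottle:
    """Sliding-window limiter: at most max_comments per key in window_s seconds."""

    def __init__(self, max_comments: int = 3, window_s: float = 60.0, clock=time.monotonic):
        self.max_comments = max_comments
        self.window_s = window_s
        self._clock = clock  # injectable so the limiter can be tested without sleeping
        self._recent = defaultdict(deque)  # key -> timestamps of accepted comments

    def allow(self, key: str) -> bool:
        """Return True and record the attempt if the key is under its limit."""
        now = self._clock()
        recent = self._recent[key]
        # Forget timestamps that have aged out of the window.
        while recent and now - recent[0] >= self.window_s:
            recent.popleft()
        if len(recent) >= self.max_comments:
            return False
        recent.append(now)
        return True


# Usage: call allow() before accepting a comment.
throttle = CommentThrottle(max_comments=3, window_s=60.0)
if not throttle.allow("session-abc123"):  # hypothetical session token
    print("Too many comments, please wait a moment.")
```

Because the state lives in process memory, this sketch resets on restart and does not work across multiple servers. A production setup would keep the counters in a shared store.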

4. Monitor Server Logs Respectfully

Although IP addresses are considered personal data under GDPR, you can still use aggregated or anonymized server logs to analyze suspicious traffic patterns. Here’s how:

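- Rotate logs frequently and do not store full IPs: truncate them, or hash them with a secret key that you rotate (a plain unsalted hash of an IPv4 address is easy to reverse by brute force)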

- Use abnormal request frequency or target pages to detect abuse attempts

- Cross-reference with behavioral patterns, not personal identifiers

This allows moderators to pick up on coordinated attacks or scraping tools without violating user privacy.
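As a rough illustration of truncating and hashing, the sketch below uses only the Python standard library. The function names, the /24 and /48 prefix lengths, and the 16-character digest are assumptions, not a legal standard. Note also that a keyed hash is pseudonymization rather than full anonymization, so the output should still be treated with care.

```python
import hashlib
import hmac
import ipaddress


def truncate_ip(ip: str) -> str:
    """Zero the host part: keep a /24 for IPv4 or a /48 for IPv6 (assumed prefix sizes)."""
    addr = ipaddress.ip_address(ip)
    prefix = 24 if addr.version == 4 else 48
    network = ipaddress.ip_network(f"{addr}/{prefix}", strict=False)
    return str(network.network_address)


def pseudonymize_ip(ip: str, secret_key: bytes) -> str:
    """Keyed hash of an IP. Rotating secret_key breaks linkability across periods."""
    canonical = ipaddress.ip_address(ip).compressed
    digest = hmac.new(secret_key, canonical.encode("ascii"), hashlib.sha256)
    return digest.hexdigest()[:16]  # shortened token, enough to group requests


print(truncate_ip("203.0.113.77"))           # 203.0.113.0
print(truncate_ip("2001:db8:abcd:1234::1"))  # 2001:db8:abcd::
```

Truncation suits coarse pattern analysis such as spotting a noisy network range. The keyed hash suits counting requests per visitor within one log-rotation period without keeping the address itself.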

5. Create an Internal Scoring System

Design a system that scores user behavior using content- and action-based metrics, not identity:

- Assign points for activities like mass-linking, all-caps shouting, rapid replies, etc.

- Set thresholds that temporarily suspend or hide content for manual review

- Maintain transparency by allowing users to know how their content is rated

This gently nudges users toward better behavior without invasive surveillance or tracking.
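One possible shape for such a scoring system is sketched below. The rule names, weights, and review threshold are invented for illustration. The point is that every score comes with a list of reasons that can be shown to the user, and nothing in the input identifies who they are.

```python
import re
from dataclasses import dataclass, field
from typing import Optional

# Illustrative weights and threshold, to be tuned per community.
WEIGHTS = {"mass_linking": 3, "all_caps": 2, "rapid_reply": 2}
REVIEW_THRESHOLD = 5

URL_PATTERN = re.compile(r"https?://\S+", re.IGNORECASE)


@dataclass
class ScoreResult:
    score: int = 0
    reasons: list[str] = field(default_factory=list)  # shown to the user for transparency

    @property
    def needs_review(self) -> bool:
        """True when the comment should be hidden pending manual review."""
        return self.score >= REVIEW_THRESHOLD


def score_comment(text: str, seconds_since_last: Optional[float] = None) -> ScoreResult:
    """Score one comment from its content and timing only."""
    result = ScoreResult()

    def add(rule: str) -> None:
        result.score += WEIGHTS[rule]
        result.reasons.append(rule)

    # Three or more links in one comment.
    if len(URL_PATTERN.findall(text)) >= 3:
        add("mass_linking")

    # Mostly uppercase letters; very short comments are ignored.
    letters = [ch for ch in text if ch.isalpha()]
    if len(letters) >= 10 and sum(ch.isupper() for ch in letters) / len(letters) > 0.8:
        add("all_caps")

    # Posted within five seconds of the author's previous comment.
    if seconds_since_last is not None and seconds_since_last < 5:
        add("rapid_reply")

    return result
```

Keeping the reasons alongside the score is what makes the later appeals step workable: a moderator or the user can see which rule fired instead of an unexplained number.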

6. Content Flagging and Community Moderation

Empower your community to help. Enable flagging features so that members can report concerning content. WordPress plugins like User Submitted Posts or WP Flag Comment offer such options. Community-driven moderation works as an early-warning system and keeps control decentralized.

7. Use Bot-Detection APIs Responsibly

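APIs like Google’s reCAPTCHA v3 or Cloudflare Bot Management score traffic for bot likelihood without requiring your site to store personally identifiable information (PII) itself. The providers still process visitor data on their side, so disclose their use in your privacy policy. Choose tools that offer adjustable thresholds and avoid collecting full IP addresses for long-term storage.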

8. Respect Privacy Laws: GDPR, CCPA, and Beyond

Your practices must meet the following legal standards:

- GDPR (EU): Avoid collecting more data than necessary. Always inform users if their behavioral data is analyzed.

- CCPA (California): Where the law applies to your site, let users see and delete the personal data you hold about them (if any) and opt out of its sale or sharing.

- General Best Practice: Don’t store raw identifying data without consent, and purge logs regularly.

9. Establish a Transparent Appeals Process

Sometimes even good users may be mistakenly flagged due to automated systems. Maintain ethical control by offering flagged users a chance to:

- Request a manual review of their suspended comment or post

- Contact moderators privately instead of through public threads

- Receive explanations (general, not personalized) for content blocks

10. Whitelist Genuine Anonymous Users

Not all anonymous users are harmful. Over time, certain accounts or behavior profiles may demonstrate reliability. These can be automatically ‘whitelisted’ or allowed more flexibility, reducing false positives and improving user experience without compromising the site’s integrity.
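A trust rule like this can be as simple as a check on an account's moderation history. The sketch below assumes a hypothetical `AccountHistory` record and arbitrary cutoffs (20 approved comments, 30 days, no upheld flags). It uses no identity data, only the track record of the account itself.

```python
from dataclasses import dataclass


@dataclass
class AccountHistory:
    # Hypothetical summary of an account's moderation record.
    approved_comments: int
    upheld_flags: int      # reports that a moderator confirmed
    account_age_days: int


def is_trusted(history: AccountHistory, min_approved: int = 20, min_age_days: int = 30) -> bool:
    """Trusted accounts can skip the review queue; a single upheld flag revokes trust."""
    return (
        history.upheld_flags == 0
        and history.approved_comments >= min_approved
        and history.account_age_days >= min_age_days
    )
```

Re-evaluating the rule on every comment, rather than storing a permanent flag, means trust is lost automatically once an account starts misbehaving.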

Frequently Asked Questions (FAQ)

Q1: Can I legally block users by IP in the EU?

A: Yes, but with limitations. IP addresses are considered personal data under GDPR. If you process or store them, you must disclose this in your privacy policy and have a lawful basis for it, such as a legitimate interest in keeping the site secure. Aggregated or anonymized IP use is safer.

Q2: How do I detect VPN users without violating privacy?

A: Rather than detecting and blacklisting VPN users as a group, act only on accounts that demonstrate abuse. Observe behavior flagged by plugins, or throttle actions from sources that generate abusive traffic. VPN usage alone is not indicative of abuse.

Q3: What should I include in my site’s privacy policy?

A: Clearly outline any data processing done via analytics, moderation, or anti-spam tools. Mention that IP addresses may be processed in truncated or hashed form for moderation purposes. Offer a way for users to request data deletion.

Q4: Are behavioral scoring systems ethical?

A: Yes—when designed transparently. Scoring systems should be based on content and actions, not on identity. Letting users appeal or contest scores ensures fairness.

Q5: How often should I review moderation rules?

A: Ideally every 3–6 months. Trends and tactics from abusive users change frequently, so staying up to date helps prevent exploits and also ensures your tools comply with updated regulations.

Balancing privacy with effective moderation takes thoughtful strategy, but it is achievable. With the right blend of tools, transparency, and user engagement, WordPress site moderators can keep their communities open, secure, and respectful for all users—anonymous or not.
