Is ChatGPT Safe? A Data Security Analysis

Artificial Intelligence (AI) tools have become an integral part of modern life, reshaping industries, streamlining workflows, and enhancing user experiences. Among the most widely recognized AI technologies today is OpenAI’s ChatGPT, a conversational AI designed to respond to human queries, generate text, and hold interactive dialogue. While its capabilities are impressive, a central question arises: Is ChatGPT safe in terms of data security? This article analyzes ChatGPT’s data security measures, potential vulnerabilities, and best practices for users.

TL;DR (too long; didn’t read):

ChatGPT is generally safe to use thanks to security measures implemented by OpenAI, including encryption, red teaming, and safety guidelines for model training. However, users should remain cautious about sharing personal or sensitive data: conversations may be stored and reviewed, and the optional memory feature can retain details across sessions. Third-party integrations and enterprise solutions also vary in their safety practices. Bottom line: use responsibly and stay informed.

Understanding How ChatGPT Works

Before diving into data security, it’s essential to understand how ChatGPT operates. ChatGPT is a language model built on OpenAI’s GPT (Generative Pre-trained Transformer) technology. It generates responses based on patterns it has learned from a broad dataset during training, which includes internet text, books, forums, and other publicly available sources. Its capabilities include:

- Answering questions

- Writing essays, scripts, and emails

- Translating languages

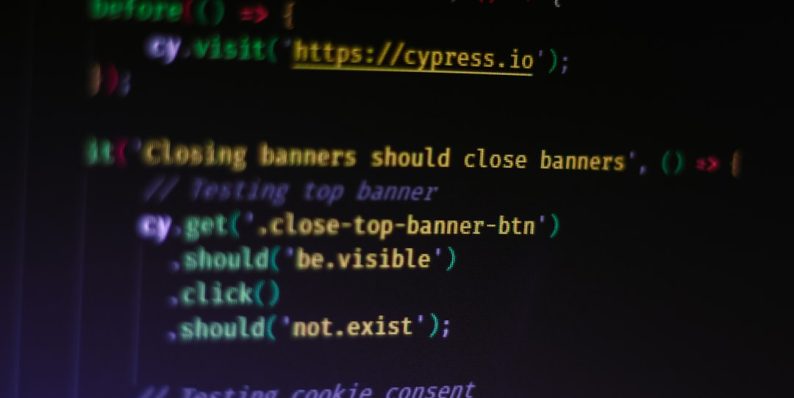

- Generating code

- Summarizing articles

However, the model does not have real-time access to personal user data unless that data is explicitly provided during a session. This is one of the first layers of protection that helps safeguard user privacy.

What Happens to the Data You Enter?

OpenAI states that it may use interactions with ChatGPT to improve system performance and accuracy. For most users, especially those using the free version, this means that the content you enter could potentially be stored, analyzed, and reviewed by moderators under controlled circumstances.

On the other hand, ChatGPT Plus and Enterprise subscribers have access to additional data controls. Specifically:

- ChatGPT Plus: Inputs may still be reviewed unless the user opts out through their data controls.

- ChatGPT Enterprise: Inputs and outputs are not used for training models, offering a privacy-first approach for corporate clients.

Nonetheless, OpenAI reminds users to avoid entering any sensitive or personally identifiable information (PII) during chats. Although the model does not carry information from one conversation to the next unless the memory feature is enabled, being careful about what you type remains essential.
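One way to act on this advice is to scrub obvious identifiers from text before it is pasted or sent into a chat. The snippet below is a minimal sketch of that idea, not a feature of ChatGPT or an OpenAI API. The `PII_PATTERNS` table and the `redact` helper are hypothetical names, and the three regular expressions are deliberately simple assumptions that will miss many real-world formats.

```python
import re

# Hypothetical, deliberately simple patterns (assumptions for illustration).
# Real PII detection needs far broader coverage and human review.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    # US Social Security number written as 123-45-6789
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace every match with a bracketed placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


prompt = "Email jane@example.com, SSN 123-45-6789, card 4111 1111 1111 1111."
print(redact(prompt))
# prints: Email [EMAIL], SSN [SSN], card [CARD].
```

A filter like this only catches well-formed patterns. Names, addresses, and confidential business details still need a human check before anything is submitted.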

Security Measures Implemented by OpenAI

OpenAI has implemented several robust protocols and practices to maintain the integrity and confidentiality of user interactions. These include:

- Data Encryption: User inputs and outputs are encrypted both in transit, using TLS (the protocol behind HTTPS), and at rest, using AES-256.

- Red Teaming and Testing: Internal and external security teams conduct red teaming simulations to identify vulnerabilities and test system resilience.

- Differential Privacy: Some versions of ChatGPT reportedly incorporate privacy-preserving techniques that limit how much personally identifiable information is exposed during analysis.

- Role-based Access Control (RBAC): Only authorized personnel under strict confidentiality agreements can access user data if needed for quality assurance or abuse investigation.

These controls provide a baseline of safety, but they’re not foolproof. Like any digitally connected tool, ChatGPT is only as secure as its weakest link—including how users interact with it.
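To make the role-based access control idea concrete, here is a minimal sketch of how such a check can work in general. It is not OpenAI’s implementation. The role names, the action strings, and the `is_allowed` helper are all hypothetical and chosen only for illustration.

```python
from enum import Enum


class Role(Enum):
    # Hypothetical roles, not taken from any OpenAI documentation
    SUPPORT = "support"
    TRUST_AND_SAFETY = "trust_and_safety"
    ENGINEER = "engineer"


# Hypothetical mapping from each role to the actions it may perform.
# Anything not listed is denied by default.
PERMISSIONS = {
    Role.SUPPORT: {"view_account_metadata"},
    Role.TRUST_AND_SAFETY: {"view_account_metadata", "view_flagged_conversation"},
    Role.ENGINEER: set(),
}


def is_allowed(role: Role, action: str) -> bool:
    """Return True only if the action is explicitly granted to the role."""
    return action in PERMISSIONS.get(role, set())
```

The design choice worth noting is deny by default: a role gains access only through an explicit entry, so a new or misconfigured role cannot read user data by accident.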

Potential Risks and Limitations

Despite these safeguards, using ChatGPT carries some inherent risks:

1. Exposure of Sensitive Data

If users inadvertently share personal, financial, medical, or confidential corporate data, it could be exposed to internal scrutiny or become part of data reviews used to fine-tune the model. Although OpenAI does not deliberately extract or misuse such information, accidental data entry poses a genuine threat.

2. Misuse by Threat Actors

Cybercriminals may misuse ChatGPT for purposes such as generating phishing campaigns, writing malware scripts, or producing deceptive content. While filters and blockers are active within the model, no system is entirely immune to circumvention attempts.

3. Third-party Integrations

ChatGPT is increasingly being integrated into third-party platforms (e.g., browsers, software tools, or mobile apps). The security standards of these platforms can vary, creating potential vulnerabilities if they’re not managed securely.

How the Memory Feature Affects Data Safety

OpenAI has introduced a memory feature in ChatGPT that allows the model to retain certain user preferences and frequently used data across sessions. While this improves functionality and personalization, it introduces an additional layer of privacy concern.

Users have control over this feature and can:

- Review what is stored in memory

- Delete memory entries selectively

- Disable the memory feature entirely

Note: Default memory settings can vary by plan, region, and rollout stage, so check your settings rather than assuming memory is off. It’s essential that users remain aware of when memory is activated and manage it according to personal privacy needs.
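The three controls listed above (review, selective deletion, and disabling) can be pictured with a toy model. The `MemoryStore` class below is purely hypothetical and does not reflect how ChatGPT stores memories or any OpenAI API. It only shows what each control means for the data that is kept.

```python
from typing import Optional


class MemoryStore:
    """Toy model of a user-controlled memory feature (hypothetical)."""

    def __init__(self, enabled: bool):
        self.enabled = enabled
        self._entries = {}   # entry id -> remembered fact
        self._next_id = 1

    def remember(self, fact: str) -> Optional[int]:
        """Store a fact and return its id, or None when memory is off."""
        if not self.enabled:
            return None
        entry_id = self._next_id
        self._entries[entry_id] = fact
        self._next_id += 1
        return entry_id

    def review(self) -> dict:
        """Return a copy of everything currently stored."""
        return dict(self._entries)

    def delete(self, entry_id: int) -> bool:
        """Remove one entry; return True if it existed."""
        return self._entries.pop(entry_id, None) is not None

    def disable(self, clear: bool = True) -> None:
        """Turn memory off and, by default, wipe what was stored."""
        self.enabled = False
        if clear:
            self._entries.clear()
```

In this sketch, nothing is written while memory is disabled, and turning it off also clears existing entries unless `clear=False` is passed. Whether a real product clears stored data on disable is a separate question worth checking in its settings.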


Best Practices for Safe Use of ChatGPT

For individuals and enterprises alike, the following best practices can help minimize potential security risks when using ChatGPT:

- Avoid sharing personal, financial, or secure login data in your conversations.

- Use enterprise-grade versions when handling sensitive information, since they come with stronger contractual and security commitments.

- Regularly review app permissions if integrating ChatGPT into external platforms or plugins.

- Opt out of having your conversations used for model training through the data controls, especially if you are concerned about privacy.

- Keep informed about updates from OpenAI regarding data usage policies and security changes.

Ethical and Legal Implications

With increasingly strict regulations such as the General Data Protection Regulation (GDPR) in Europe and the California Consumer Privacy Act (CCPA) in the U.S., users and companies must also consider the regulatory implications of using ChatGPT. These include:

- Consent Collection: Ensure users are notified if AI is used in interactions, particularly in customer service and HR processes.

- Data Retention: Define and enforce policies on how long chatbot-generated data is retained and how it’s disposed of.

- Audit Capability: Conduct regular audits to verify that no sensitive information is being inadvertently stored or accessed.

OpenAI states that it supports compliance with these frameworks, but organizations integrating ChatGPT share responsibility for meeting their own ethical and legal obligations.
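For organizations that log chatbot conversations on their own systems, the data retention point can be enforced with a scheduled purge. The following is a minimal sketch under stated assumptions: the `ChatRecord` type, the `purge_expired` function, and the 30-day window in the usage lines are hypothetical, and the right retention period depends on your own legal and policy requirements.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class ChatRecord:
    record_id: str
    created_at: datetime  # timezone-aware timestamp, assumed to be UTC


def purge_expired(records, retention_days, now=None):
    """Split records into (kept, purged) based on a retention window.

    A record is purged when it is older than `retention_days`.
    """
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=retention_days)
    kept = [r for r in records if r.created_at >= cutoff]
    purged = [r for r in records if r.created_at < cutoff]
    return kept, purged


# Usage with a fixed clock so the result is reproducible
now = datetime(2025, 1, 31, tzinfo=timezone.utc)
records = [
    ChatRecord("old", datetime(2024, 12, 15, tzinfo=timezone.utc)),
    ChatRecord("recent", datetime(2025, 1, 25, tzinfo=timezone.utc)),
]
kept, purged = purge_expired(records, retention_days=30, now=now)
```

Returning the purged records instead of silently dropping them lets the caller write an audit log entry before deletion, which supports the audit requirement as well.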

Conclusion: Is ChatGPT Safe?

Yes—ChatGPT, under most circumstances, is safe to use. OpenAI continues to invest heavily in security measures, privacy protocols, and ethical oversight. However, this safety is conditional upon responsible use and proper implementation.

ChatGPT does not possess real-time access to databases, does not autonomously store personal data outside agreed contexts, and offers transparency about how data is used. Still, like any tool interfacing with the vast landscape of human information, it is susceptible to misuse and must be treated with caution.

The safest way forward? Stay informed, leverage enterprise solutions for critical use cases, and always think twice before typing anything sensitive into the chatbox.
